OK, folks, please bear with me as I go on a bit of a rant about statistics and using data to basically lie (and end with a rant about why this might be happening).

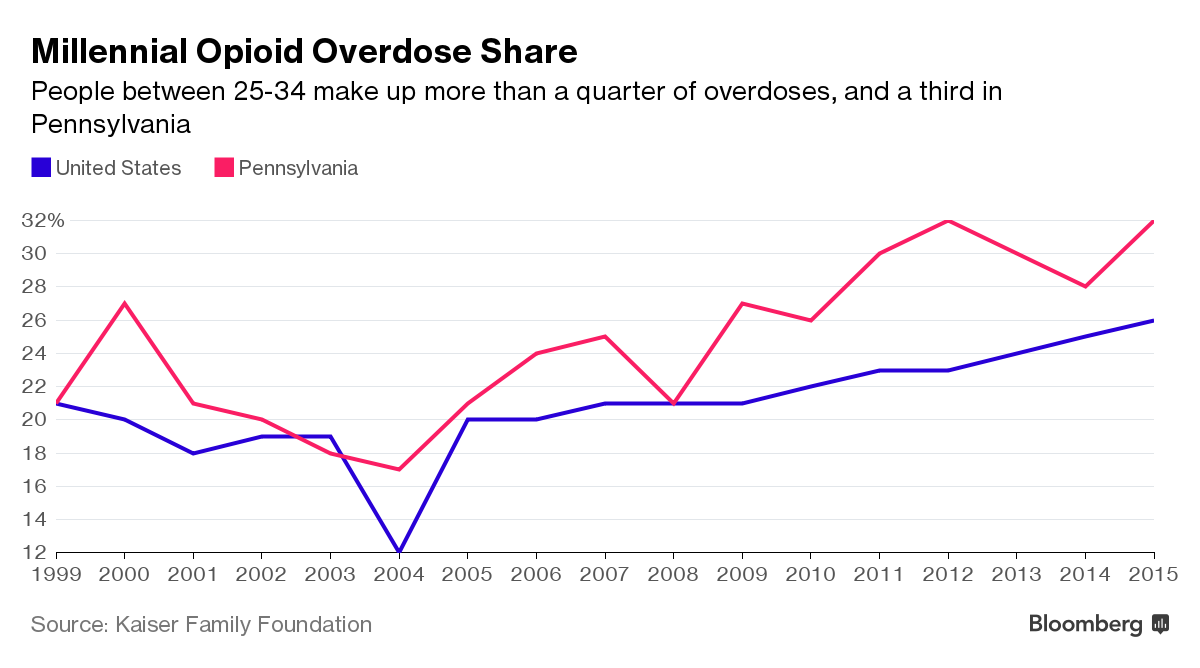

I had been reading an article titled “Young White America Is Haunted by a Crisis of Despair” and happened upon a graph. It wasn’t a remarkable graph, but something about it just didn’t make sense to me. Then I looked at the axis (which runs from 12% through 32%) and began to question the data.

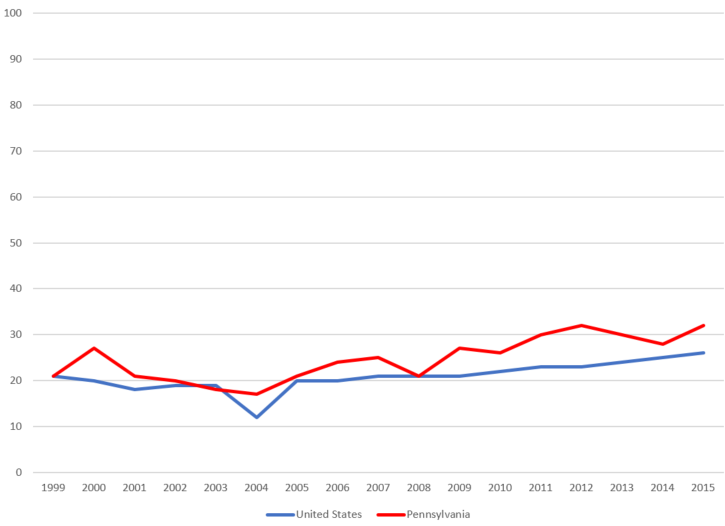

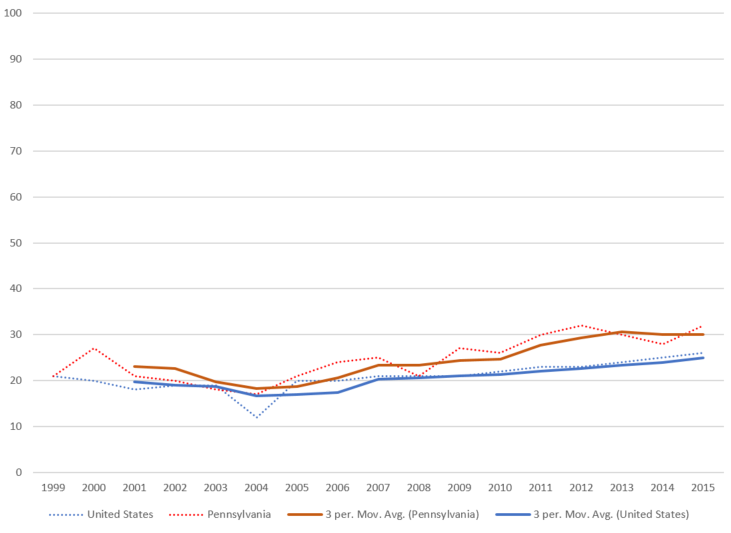

People put things on a truncated axis like this when they want to make a difference look bigger than the data actually warrants. OK. So, we can go through and type this into Excel and make up a bit of a graph to show us what it looks like on a more accurate axis.
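To put a rough number on how much a truncated axis can exaggerate things, here is a minimal sketch. The 12% to 32% axis range comes from the graph; the two percentages are hypothetical stand-ins (I'm not retyping the article's data here), and the function names are just mine.

```python
def drawn_height(value, axis_min, axis_max):
    """Fraction of the plot's height that a value occupies on a given axis."""
    return (value - axis_min) / (axis_max - axis_min)


def apparent_ratio(a, b, axis_min, axis_max):
    """How many times taller `a` looks than `b` when drawn on that axis."""
    return drawn_height(a, axis_min, axis_max) / drawn_height(b, axis_min, axis_max)


# Hypothetical percentages, NOT the article's actual data.
pennsylvania, usa = 22.0, 17.0

# Axis as published: 12% through 32%.
truncated = apparent_ratio(pennsylvania, usa, 12.0, 32.0)

# Same values on an axis that starts at zero.
zero_based = apparent_ratio(pennsylvania, usa, 0.0, 32.0)

print(f"truncated axis: looks {truncated:.2f}x as tall")
print(f"zero-based axis: looks {zero_based:.2f}x as tall")
```

With these made-up values, a gap of five percentage points looks like a doubling on the truncated axis, but only about a 29% difference on an axis that starts at zero.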

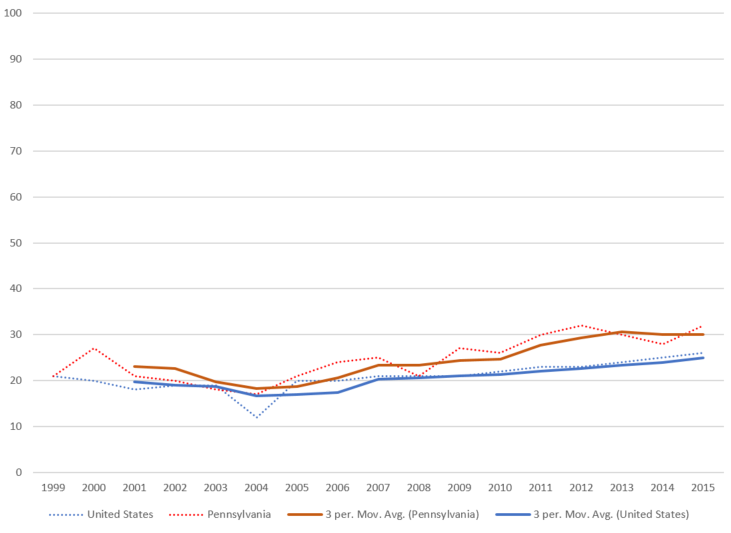

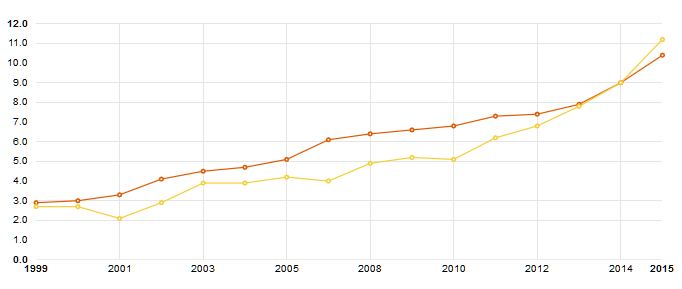

OK, that lets us see the data a bit better (i.e., that there’s not much of a difference between Pennsylvania and the rest of the USA). But it still doesn’t tell us anything – I mean, there must be a reason the writer is all het up about whatever’s happening in Pennsylvania, right? So, let’s slap a trend line on things and see where they’re going – let’s put a 3-year moving average on both the US and Pennsylvania’s percentages and see if they’re radically different from one another.
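If you'd rather not do this in Excel, a 3-year moving average is only a few lines. This is a sketch that assumes a trailing average (each point is the mean of that year and the two before it), and the yearly values are hypothetical placeholders rather than the real series.

```python
def moving_average(values, window=3):
    """Trailing moving average: each output is the mean of `window` consecutive values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Hypothetical yearly percentages, NOT the article's actual data.
yearly = [18.0, 19.0, 17.0, 21.0, 20.0, 22.0]

smoothed = moving_average(yearly, window=3)
print(smoothed)
```

Note that the smoothed series is two points shorter than the raw one, because the first two years don't have enough history behind them for a full window.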

OK, so, that chart shows us the raw numbers as dotted lines and the moving averages as solid lines. Yes, it does look as if Pennsylvania is edging up. But this is weird, really, because we don’t understand what we’re looking at: an arbitrary slice of the population (ages 25-34) across 16 years. So … what does this really tell us? Well, without knowing how the other age bands behave, we won’t know anything at all. So, let’s go see what the Kaiser Foundation tells us about that. Kaiser Foundation data pretty much says that, yes, this age range in Pennsylvania does, indeed, overdose on opioids more often … but it also says that Pennsylvanians ages 35 and above overdose on opioids far less frequently than the US population (which could be saying that people in Pennsylvania overdose earlier than the rest of the country, but at about the same rate overall).

So, is Pennsylvania doing worse than the rest of the country? Well, Kaiser will let us troll through the data, so let’s see if we can put together a chart to tell us just that. If we ask for non-age data (e.g. by going to Opioid Overdose Deaths by Gender and removing Gender) then we see something that paints a different picture entirely.

Looking at the data without including age as a factor, Pennsylvania has historically been a pretty decent place to be, in that its opioid overdose deaths have tended to be several percentage points lower than the national average. That stopped being true as of last year … but how do we know that this is a trend, rather than just noise?
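One crude way to poke at the noise question: take the yearly gap between Pennsylvania and the national figure, and ask how far the latest gap sits from the earlier ones, measured in standard deviations. This is only a sketch under loud assumptions: the gap values are hypothetical, the function name is mine, and a single unusual year can flag a surprise but cannot establish a trend.

```python
from statistics import mean, stdev


def latest_gap_z_score(gaps):
    """Standard deviations between the last gap and the mean of the earlier gaps."""
    history, latest = gaps[:-1], gaps[-1]
    if len(history) < 2:
        raise ValueError("need at least two earlier years to estimate the spread")
    spread = stdev(history)  # sample standard deviation of the earlier years
    if spread == 0:
        raise ValueError("earlier gaps are identical; spread is zero")
    return (latest - mean(history)) / spread


# Hypothetical gaps (Pennsylvania minus US, in percentage points), NOT real data.
gaps = [-3.0, -2.0, -4.0, -3.0, -2.0, -4.0, 1.0]

z = latest_gap_z_score(gaps)
print(f"latest gap is {z:.2f} standard deviations from the earlier years")
```

A large value says the latest year is unusual compared to past wobble. It says nothing about whether next year will look the same, which is exactly the problem with calling one year a trend.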

Also, there’s one thing that’s missing from all of this analysis: how do we know that it has anything whatsoever to do with race? Because, really, we haven’t had anything that shows race at all in here, and when I try to ask Kaiser about their data by Race / Ethnicity I get some interesting results … in that I get a handful of data that is “NSD: Not sufficient data. Data supressed to ensure confidentiality.” Try it yourself and see what you can make of it.

That last query brings up another problem in the article: the article says that the deaths of a particular age range and ethnic group are different from the same age range for different ethnic groups … but that data doesn’t appear to be readily available, nor does any of the data out there seem to support that, nor does any of this appear to be beyond the range of statistical noise.

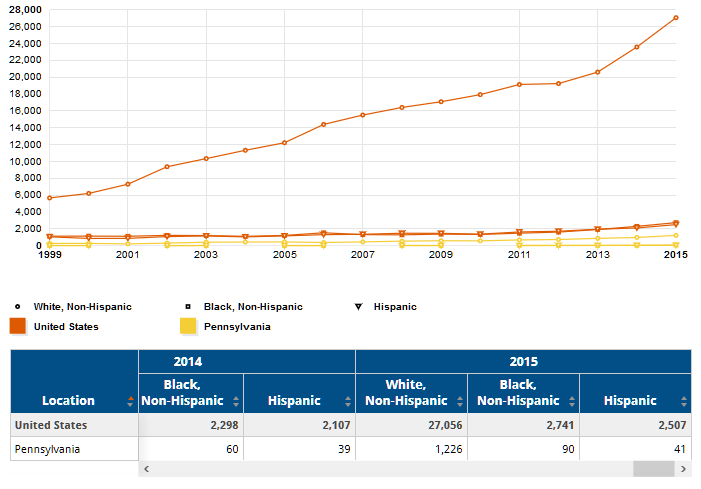

Now, what happens if we ask for numbers rather than percentages?

The top line is the total US number, keep in mind. But, yes, there does appear to be a wee problem there in Pennsylvania among white people. Of course, we don’t know how many black or Hispanic people there are in Pennsylvania, so we can’t say what percentage is affected by “the opioid epidemic,” and this is particularly true because when you look at the trend of Pennsylvania it kind of seems like it’s rising very slowly, whereas there’s a jump beginning at 2013 for the national total. So, does Pennsylvania have a problem? We just cannot say. (We also haven’t looked at when the stronger variants of opioids came onto the market, but do know that those are having some effect, as people encounter drugs which are stronger than expected.)

The other thing to point out: we’re talking about some pretty small numbers all along. I’m sorry, but 27,000 white people dying in a year, out of a total population of 320 million people, just doesn’t seem like a huge thing to talk about, to be honest. I mean, sure, it’s a tragedy for the families involved. But how about the 614,348 people who died from heart disease in the same year? The 591,699 who died of cancer? The 147,101 who died of respiratory disease? Or 136,053 from accidents, 133,033 from strokes, 93,541 from Alzheimer’s, 76,488 from diabetes, 55,227 from influenza, 48,146 from kidney disease, and 42,773 from suicide?

In case you missed it in those numbers (all of which are significantly greater than the number of opioid deaths): 55,227 Americans died from the flu last year, which is nearly twice the number who died from opioids.
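To put those counts on a common scale, here is a small sketch that turns them into deaths per 100,000 people. The counts and the 320 million population are the ones quoted above; the opioid entry is the 27,000 figure for white people as quoted, so treat the comparison as rough rather than apples to apples.

```python
US_POPULATION = 320_000_000  # total population figure quoted in the text

# Annual death counts as quoted in the text.
deaths = {
    "heart disease": 614_348,
    "cancer": 591_699,
    "respiratory disease": 147_101,
    "accidents": 136_053,
    "strokes": 133_033,
    "Alzheimer's": 93_541,
    "diabetes": 76_488,
    "influenza": 55_227,
    "kidney disease": 48_146,
    "suicide": 42_773,
    "opioids (white people, as quoted)": 27_000,
}


def per_100k(count, population=US_POPULATION):
    """Deaths per 100,000 people."""
    return count * 100_000 / population


rates = {cause: per_100k(count) for cause, count in deaths.items()}

# Print from most to least common.
for cause, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{cause:<36}{rate:>7.1f} per 100,000")
```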

All of this leads me to wonder: why do we place such importance upon this particular narrative? Why is it important to tell the story that poor white people who didn’t get college degrees are getting into heroin? Why are we, as a nation, accepting articles like this one, which are barely supportable by the statistics, and which are looking at a problem which, on the face of it, doesn’t seem to be a significant problem when compared to other things? (As an example: give everybody a flu shot and you’ve likely saved as many lives as are lost to opioid overdoses.)

I would like to have full access to the data set, to see if I can slice it and come up with something compelling about Pennsylvania White People, but I just can’t see it in the available data. What I do see is a story being told without the data to support it, and I wonder why it’s an important story to the nation, at this time.

My answer to that question? We’ve seen that the war on drugs does not work, and this is a narrative which can be accepted (by White People) as a reason to change our national strategy. Spinning the story this way, though, ignores the generations of minorities who have been incarcerated for drug offenses. It lets White People continue to think that mass incarceration of minorities for drug crimes was OK. Making “the opioid epidemic” about Poor Whites allows a change in drug policy without addressing racial injustice.

-D