High summer, and we are among the living.

It’s hard to believe how much has changed, when it seems that staying so close to home, so little does.

We took pictures of the yard when it was flat dirt, and when Himself was tilling and planting – but the pictures don’t do it justice. There’s nothing that can describe dead ground suddenly becoming alive… a sun-baked stretch of clay becoming a DIY meadow for dragonflies, butterflies, a league of lizards, two nesting pairs of mockingbirds, a scrub jay, countless goldfinches and house finches and all the pollinators – at least three types of bees, by striping pattern, among numerous others, including tiny, jewel-green flies (whose ironic common name is Green Jewel Flies. Can’t make this stuff up). Despite the clay soil and the fox leaving calling cards early on, somehow, this is the best garden, yet. We have giant zinnias. We have giant marigolds. We have …color and life and birds swooping around, and the odd tiny kestrel come calling, the ubiquitous crows, as well as the hooting of owls at night.

We are still here – and how are you?

We didn’t start out with the idea for a DIY meadow. We just knew we wanted… something to see. The entire back wall of the house is dedicated to windows, and we needed something other than flat ground and dirt to look at, as the year turned. The extra rain we had this past winter really encouraged us to take a chance and drain the little pond that had settled itself into the center of the old fire pit – and T’s family giving her gardening supplies for her birthday in March sealed the deal. She received seeds she would have never otherwise purchased or tried growing. Just flinging the seed out there onto the newly tilled ground and hoping for the best made a big difference.

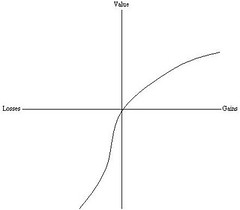

A study from the University of Colorado (funded by the American Cancer Society) published in January 2023 in the journal Lancet Planetary Health found that people who started gardening saw their stress and anxiety levels decrease significantly. This wasn’t WHY we started gardening, but it’s been a definite, positive by-product. This was a stressful winter workwise for Himself, with a lot of political shenanigans and nonsense going on. (Ironic that even working for yourself can be political.) Work hits its ebbs and flows, so there was less work with more annoying people. Odd how that works. T meanwhile slogged through finishing a novel that she didn’t want to write (but was under contract for). Dreading one’s work made it (at first) much harder work than she expected, and that took a lot out of her. And then, the health outcomes she was dreading came to pass – the new biologic drug she’s on showed wearying signs of not working, and, worse, brought on the hemolytic anemia she had carefully worked to prevent for years. Staggering with exhaustion (and wishing that weren’t literal), depressed and discouraged after the long winter and uncertain Spring, both T&D needed a win.

Which was where gardening came in. Gardening, friends, is an act of faith.

One must believe in the potential of this weird looking bit of woody …something. It’s dull and tiny and one must toss it in the dirt like detritus, and think, “Okay, we’re told you have all that you need inside of you to do your thing. Go.” And then one must wait. Five to seven days, ten days, fifteen, and that woody bit of nothing …transforms. It pokes up through the soil, completely changed into the likeness of a plant – a tiny bit of green, which, in a few more days produces true leaves which determine what it will be. An act of faith, the substance of which is hoped for, the evidence which is now seen. To quote Audrey Hepburn, to plant a garden is to believe in tomorrow.

The pandemic zeitgeist invented something else called… rage gardening. People were so tired and fed up with things in the world that sometimes the only thing they felt like doing was hitting something… so they went out and hit the ground with a pick axe. (THIS is contraindicated – those suckers are heavy and you could hurt your back, and then you’d feel so much worse…Start with a hoe.) Ripping out plants, flinging away rocks and chunks of clay, yanking out weeds and sharply cutting a hoe into recalcitrant soil has continued to have its uses. Especially for T, for whom holding a hose to water some days requires sitting down, beating on something until she is breathless is helpful. There’s very little that individuals can do to change things, yet we are subtly shamed and castigated for the warming planet, for the political situation, for the stupidity of book bans. There is very little that is our fault, yet there is a lot which is our responsibility, and sometimes the very little we can do to control the depth of the handbasket in which we are going to hell wages war with the choices we can make to turn the lives of our community in a positive direction. Sometimes, life is exhausting. There is so little we control – but how many weeds are growing next to your zinnias? That, you can manage.

Obviously, not everyone gardens in a temper – that sounds exhausting, to say the least – but ripping out things and turning soil has left us understandably exhausted. At least that chronic fatigue makes sense. At least in the garden, frustration can be a source of good, to give us space to process what we know, that bad times won’t last forever, that we’re being cradled, held, and looked after, even when it doesn’t feel like it. That joy comes in the morning.

Gardening then becomes a portable magic. Carried from the parent plant, seeds, via bird poop, wind, rodent digestion, or some intrepid gardener who glares at the squirrels and frets at the finches stealing “his” seeds (sound like anyone you know? Maybe????), these bits of the future go out into the world, not knowing where they’ll land. But, land they do, and they recreate themselves, reinventing themselves to fit where they need to be, over and over and over again.

“It is a greater act of faith to plant a bulb than to plant a tree . . . to see in these wizened, colourless shapes the subtle curves of the Iris reticulata or the tight locks of the hyacinth.” –Clare Leighton, Four Hedges

Gardening allows us, even briefly, to take some of that mute, unseeing, seed-like faith into ourselves – and to wait steadily and patiently for what’s inbuilt to do its job – giving us space to wait with grace for another day, a solution, a new medication, an ending to crisis.

We planted two types of melon this year – neither of them remotely “normal,” because seed catalogs arrive the day after Christmas, when it is dark and one’s resistance is at a low ebb. (Well played, seed companies.) T was enticed into purchasing two heirloom-ish things she’d never even heard of, one a single-serve Tigger melon, which begins a deep, striped green, but which is a deep, striped orange when ripe, and the other, a mastodon-sized, orange-fleshed monster.

The largest watermelon is about four to six pounds already, which we consider shocking – we’ve never successfully grown decent melons without “help” from the deer in the form of either stepping on each one of them, or taking a bite – one single bite – from each, because grazing animals are sometimes complete dorks. TBH, we’re afraid to hope the Tigger and the Orange Crush – or whatever it’s called – actually come ripe. So far, however, they’re doing their thing, and sending out distinctive leaf-shapes on sturdy vines to colonize the shady area beneath the bushes along the fence.

Meanwhile, on the far side of the house we have at last count twelve Georgia Roasters – a Comanche Nation heirloom variety of squash used in the Three Sisters planting method (the squash, maize, and pole beans thing) – six or eight delicata squash, and a handful of birdhouse gourds ongoing. (Why we chose to plant those next to each other is a short, dumb story – we had old, old gourd seeds and didn’t think they’d germinate. Joke’s on us as both birdhouse gourds and roasters will grow a ten-foot vine FROM ONE SEED. The morning glories are climbing them, and the vining is almost visible if you stand still watching them long enough. The race is on to pull down the neighbor’s fence.)

The roaster squash, relatives of butternut, are just HUGE so far, and we’re not even close to their full weight, which sometimes exceeds fifteen pounds EACH, and they exceed the length of a forearm. We are excited to have overwintering hard squashes – something we’ve also not ever tried to grow. We also have birdhouse gourds going – we did those once and they were fun. We made all these cute birdhouses and gave them away – and the one we kept, a windstorm blew down and shattered, disappointingly. With the 35 mph winds we had last winter, which blew down the fence, we’re going to be much more careful with these.

The season is waning – we are collecting seeds and already seeing the fading of the intense colors and the drying out of the vines as the squashes and gourds begin to ripen. We have harvested and collected seeds ready to unleash into the soil to create another shorter, intense flowering season before the El Niño rains promised/threatened come and soggify the soil into unresponsive clay lumps again. We’re hopeful that the next growing season will be easier – that the green compost we turn into the sandy, clay soil will attract more worms and rejuvenate what tends to be basically worthless. We’ve had an amazing season without knowing what we were up against, and now plan to turn rice hulls and other organic material into the soil in hopes of helping it do even better.

Having access to an outdoor space is a privilege, one we’re aware of, and grateful for. Our world is smaller and circumscribed, and as you might expect, living with restrictions as we do, due to T’s autoimmune disorder, is sometimes annoying. The world leaves those chronically unable to participate behind, ever well-meaning but inadvertently too fast, and expecting everyone to keep up. We as a society don’t do well with protracted anything. With chronic illness, there’s almost this sense of “aren’t you done with that YET???” Nope, we’re not, and we might not be, for many years or ever. Happiness is dealing with what you can and letting the rest go, however. We haven’t contracted Covid, by the grace of God. We haven’t had more than a passing stomach bug – no serious illnesses other than the one(s) already here. Part of “the rest” that we’ve let go has been the things and people we experience firsthand, where we go and what we do. We hope to continue to make adjustments and figure out how to live within our restrictions. We hope to hear how you’re doing. We hope someday things change. But, until then, there’s the garden – and the internet – and the blessings of friends who send good wishes, which we cannot take for granted.

Hold onto those things, and don’t let go.